According to Encyclopedia Britannica, artificial intelligence (AI) is:

the ability of a digital computer or computer-controlled robot to perform tasks commonly associated with intelligent beings. The term is frequently applied to the project of developing systems endowed with the intellectual processes characteristic of humans, such as the ability to reason, discover meaning, generalize or learn from past experience.

At its core, AI enables machines to perform tasks that typically require human cognitive abilities, like learning from data, recognizing patterns and making decisions. Did you notice that I used the term machines rather than computers in the previous sentence? That is because AI models can run on a range of machines, including but not limited to computers, smartphones, robots and automobiles. Using AI, machines strive to simulate fundamental human intelligence: to think, learn and solve problems.

Various organisations, from the World Economic Forum and NITI Aayog to IBM and Google, have their own definitions of AI, each shaped by its own objectives and line of work. Encyclopedia Britannica’s was the only one I found neutral, realistic and a good starting point for students like you. But I would encourage you to explore the other definitions and try to identify the subtle differences.

Understanding AI is essential for you to step into a future where intelligent systems play a crucial role.

Now, the term AI is used interchangeably for several things, which can get confusing. It can refer to:

- theoretical concept of mimicking human intelligence

- technologies used in developing AI systems

- algorithms used to develop AI systems

- models or software that use AI tech

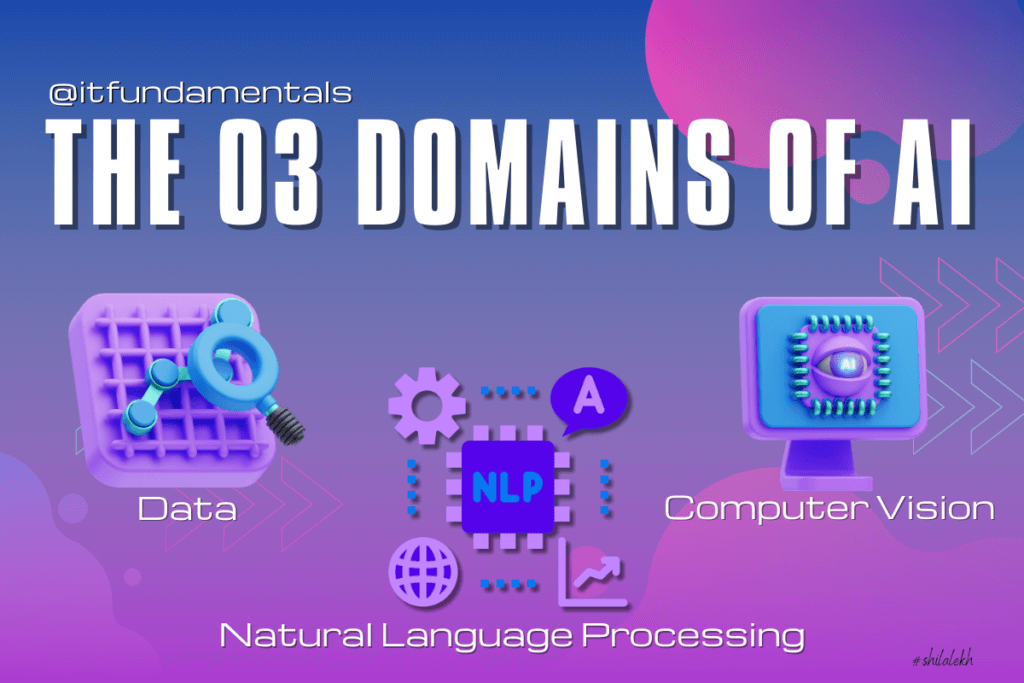

In this article, we will discuss the three core domains that are critical for AI systems.

The 3 domains of AI

AI operates in these three core domains depending on the type of data it has to process:

- Data sciences: Handling and deriving insights from data.

- Computer Vision (CV): Enabling machines to interpret and act on visual information.

- Natural Language Processing (NLP): Teaching computers to understand and interact using human language.

Imagine you use an app to translate text into another language. The app processes your words using NLP, and if you add a photo of text for translation, it uses CV. The entire system relies on large datasets, which are part of data sciences.

Data: the foundation of AI models

Data forms the backbone of AI models or systems (throughout the article I will be using AI, AI models and AI systems interchangeably). It can be structured (like spreadsheets) or unstructured (like social media posts). A large collection of data, organised in a way that makes it easier to process and analyse, is called a dataset. AI learns patterns by studying this dataset and makes predictions based on them.

In case you are wondering how AI “learns” when it’s a machine, let me tell you this: AI systems use data as input to various algorithms and the output (result of all the analysing and processing that the algorithms do) is what they “learn.” The algorithms used for this “learning” are called machine learning algorithms, or simply machine learning. And this learning is called training.

Here is a video I created on how machines learn.

Think of YouTube recommending next videos in the right sidebar on a computer. Have you wondered how it does that? It uses your watch history (data) to suggest content tailored to your interests. Every time you watch a YouTube video, you are training, or telling, the YT algorithm what types of videos you like. Sometimes it looks at data from users from your demographic (location, age, gender, education, ethnicity, etc.) as well.

How data fuels AI

Large amounts of data are collected, cleaned and analysed to train machine learning models. More data generally means better accuracy, as AI learns from more diverse scenarios. Hence the need for large datasets.

Data needs to be cleaned before it is usable

Real-world data is often messy and can contain errors, missing values or irrelevant information, which may mislead AI models. Think of a Reddit thread as input data and you can understand how inaccurate or biased that data can be. Data cleaning ensures that the data is accurate, consistent and usable. It removes duplicates, outliers (extreme values that occur very rarely) or incorrect entries.

For instance, a dataset of customer ages might incorrectly include negative values or impossibly high numbers. These are obviously incorrect and/or impossible. So they are removed during the cleaning process.
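To make the cleaning step concrete, here is a minimal sketch in Python. The customer records, the `clean` function and the plausible age range of 0 to 120 are all assumptions made up for illustration; a real project would pick its own rules.

```python
# Hypothetical customer records; names and ages are made up for illustration.
records = [
    {"name": "Asha", "age": 34},
    {"name": "Ravi", "age": -5},    # negative age: impossible
    {"name": "Meera", "age": 230},  # impossibly high age
    {"name": "Asha", "age": 34},    # exact duplicate of the first record
    {"name": "John", "age": 19},
]

MIN_AGE, MAX_AGE = 0, 120  # assumed plausible range for a human age


def clean(rows):
    """Drop exact duplicates and rows whose age is outside the plausible range."""
    seen = set()
    cleaned = []
    for row in rows:
        key = (row["name"], row["age"])
        if key in seen:
            continue  # duplicate entry
        seen.add(key)
        if MIN_AGE <= row["age"] <= MAX_AGE:
            cleaned.append(row)
    return cleaned


cleaned_records = clean(records)
print(cleaned_records)
```

Only the records for Asha (once) and John survive, because the other rows are either duplicates or fall outside the assumed age range.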

Consistent data is easier and faster to process

One person might write a date in the “DD/MM/YYYY” format while another uses “MM-DD-YYYY”. Before storage, data is converted into standardized formats to maintain uniformity. Uniform data is easier and faster to process.
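As a rough illustration, the two date formats mentioned above could be standardized like this. The helper name `to_iso` and the choice of YYYY-MM-DD as the target format are my assumptions; any single agreed format would serve the same purpose.

```python
from datetime import datetime

# Formats we assume appear in the raw data (taken from the examples above).
KNOWN_FORMATS = ["%d/%m/%Y", "%m-%d-%Y"]


def to_iso(date_text):
    """Return the date as YYYY-MM-DD, or None if no known format matches."""
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(date_text, fmt).date().isoformat()
        except ValueError:
            continue  # try the next known format
    return None


print(to_iso("25/12/2024"))  # written as DD/MM/YYYY
print(to_iso("12-25-2024"))  # written as MM-DD-YYYY
```

Both inputs end up as the same standardized string, and anything that matches neither format is flagged with `None` instead of being guessed.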

Incomplete data can give incorrect output

Sometimes collected data is incomplete, which can affect the output. The output may be biased or downright inaccurate. For example, if 20% of a dataset lacks gender data and you are trying to predict gender-specific preferences using the AI model, the output will be less accurate or, worse, biased.

When faced with incomplete data, you (as a data scientist) either remove the incomplete entries or use statistical techniques (such as estimation and extrapolation) to fill in the missing data points.
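Here is a small sketch of both options. The list of ages is hypothetical, and filling gaps with the mean of the known values is just one simple estimation technique among many.

```python
from statistics import mean

# Hypothetical ages; None marks a missing entry.
ages = [25, None, 31, 40, None, 24]


def drop_missing(values):
    """Option 1: remove the incomplete entries entirely."""
    return [v for v in values if v is not None]


def fill_with_mean(values):
    """Option 2: replace each missing entry with the mean of the known ones."""
    average = mean(drop_missing(values))
    return [average if v is None else v for v in values]


print(drop_missing(ages))
print(fill_with_mean(ages))
```

Dropping entries shrinks the dataset, while filling keeps its size but adds estimated values, so the right choice depends on how much data is missing and what the model is used for.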

Data is analysed before it is part of a dataset

Data is typically collected with a project in mind. That is why it is necessary to ensure that the data ready to be part of the project dataset is relevant to project scope and can be used efficiently. Say, for a weather prediction model, storing data about the city’s population may be irrelevant.

Data analysis tools help you avoid storing repetitive and unnecessary information, which reduces project cost for storage. For instance, summarizing daily sales data into weekly trends reduces storage needs without losing insights.
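The daily-to-weekly summary could look something like this in Python. The sales figures and the function name `weekly_totals` are invented for the example, and I am assuming ISO calendar weeks (Monday to Sunday).

```python
from collections import defaultdict
from datetime import date

# Hypothetical daily sales figures: (date, units sold).
daily_sales = [
    (date(2024, 1, 1), 10),
    (date(2024, 1, 2), 12),
    (date(2024, 1, 7), 8),
    (date(2024, 1, 8), 15),
    (date(2024, 1, 9), 5),
]


def weekly_totals(sales):
    """Sum daily sales per ISO calendar week, keyed by (year, week number)."""
    totals = defaultdict(int)
    for day, units in sales:
        iso = day.isocalendar()  # (ISO year, ISO week number, weekday)
        totals[(iso[0], iso[1])] += units
    return dict(totals)


print(weekly_totals(daily_sales))
```

Five daily rows are reduced to two weekly rows, which is the kind of saving that adds up quickly over years of data.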

This is also the phase where data is again checked for inconsistencies, missing entries or corrupted data. This helps identify issues that might have been missed during data cleaning.

That’s all you need to know for now about data needed for AI models. Let’s move to the next AI domain.

Computer Vision: teaching machines to see

Computer Vision enables machines to interpret visual data like images and videos. It involves tasks like object detection, image recognition and pattern analysis. Machines use algorithms to analyze visual input by breaking down an image into pixels and processing these to identify objects or patterns.

Facial recognition on smartphones is an excellent everyday use of computer vision. It compares your face to a saved image to unlock your phone. Let’s discuss the process in a little more detail.

Image processing to identify objects and patterns

Processing images to identify objects and patterns needs a systematic approach so that the machines can interpret and make sense of visual information. These CV systems are already trained on huge datasets to identify objects relevant to the task at hand.

Here is a broad step-by-step process that these models use to identify a new image:

- Image Acquisition: The first step in image processing is capturing images using cameras or sensors. For instance, the smartphone camera captures your face for facial recognition.

- Preprocessing: The captured image is then preprocessed to make it manageable. We all know how large and difficult to handle an image can be! Grayscale is the most compact format to work with, because each pixel stores a single brightness value instead of three colour values. If retaining the colour of an image is not critical for the output, the image is converted to grayscale. However, if the colour of the image is important (for instance, the model has to predict how ripe a fruit is), the RGB colour is retained. Other techniques, such as image normalisation, are applied to make further computations efficient.

- Feature Extraction: Next, the AI model uses different algorithms to detect defining features such as edges, corners or textures. This is called feature extraction. For example, in traffic analysis, these features might help the system detect the outline of a car or identify a pedestrian.

- Segmentation: Next, the image is divided into smaller regions based on characteristics like color, intensity or texture. This helps isolate objects of interest from the background, such as separating a person from the surrounding scenery in a security camera feed.

- Object Detection and Classification: Using advanced neural networks like Convolutional Neural Networks (CNNs), the system analyses patterns in the segmented regions to recognize and categorize objects. For example, an AI model deployed in autonomous vehicles identifies road signs, pedestrians or other vehicles to ensure the car can navigate safely.

- Decision Making: Finally, based on identified objects, the system takes action. A self-driving car, for instance, might detect a stop sign and decide to apply the brakes, ensuring compliance with traffic rules.

This step-by-step process should help you understand how computer vision transforms raw visual data into actionable insights, enabling machines to interact intelligently with their environments. Whether it’s recognizing faces, detecting anomalies in medical imaging or assisting in autonomous driving, each step contributes to the overall capability of AI systems to “see” and act.
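To get a feel for the preprocessing and feature extraction steps, here is a toy sketch that works on a tiny made-up image stored as nested lists. Real CV systems use trained neural networks and specialised libraries; the function names, the 0.5 threshold and the image itself are assumptions for illustration only.

```python
# A tiny hypothetical 3x4 RGB image: dark on the left half, bright on the right.
DARK, BRIGHT = (10, 10, 10), (250, 250, 250)
image = [[DARK, DARK, BRIGHT, BRIGHT] for _ in range(3)]


def to_grayscale(rgb_image):
    """Preprocessing: collapse each (R, G, B) pixel into one brightness value.

    Uses the common luminance weights 0.299, 0.587 and 0.114.
    """
    return [[0.299 * r + 0.587 * g + 0.114 * b for r, g, b in row]
            for row in rgb_image]


def normalise(gray_image):
    """Preprocessing: scale brightness from the 0-255 range to roughly 0.0-1.0."""
    return [[value / 255 for value in row] for row in gray_image]


def horizontal_edges(gray_image, threshold=0.5):
    """Feature extraction: mark where brightness jumps between neighbours."""
    return [[abs(row[x + 1] - row[x]) > threshold for x in range(len(row) - 1)]
            for row in gray_image]


gray = normalise(to_grayscale(image))
edges = horizontal_edges(gray)
print(edges[0])
```

The only position marked as an edge is the boundary between the dark and bright halves, which is exactly the kind of feature later steps build on to find outlines of objects.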

Examples of applications that use computer vision: Facial recognition, Self-driving cars, Medical imaging, Quality control in manufacturing

Natural Language Processing: teaching machines to understand language

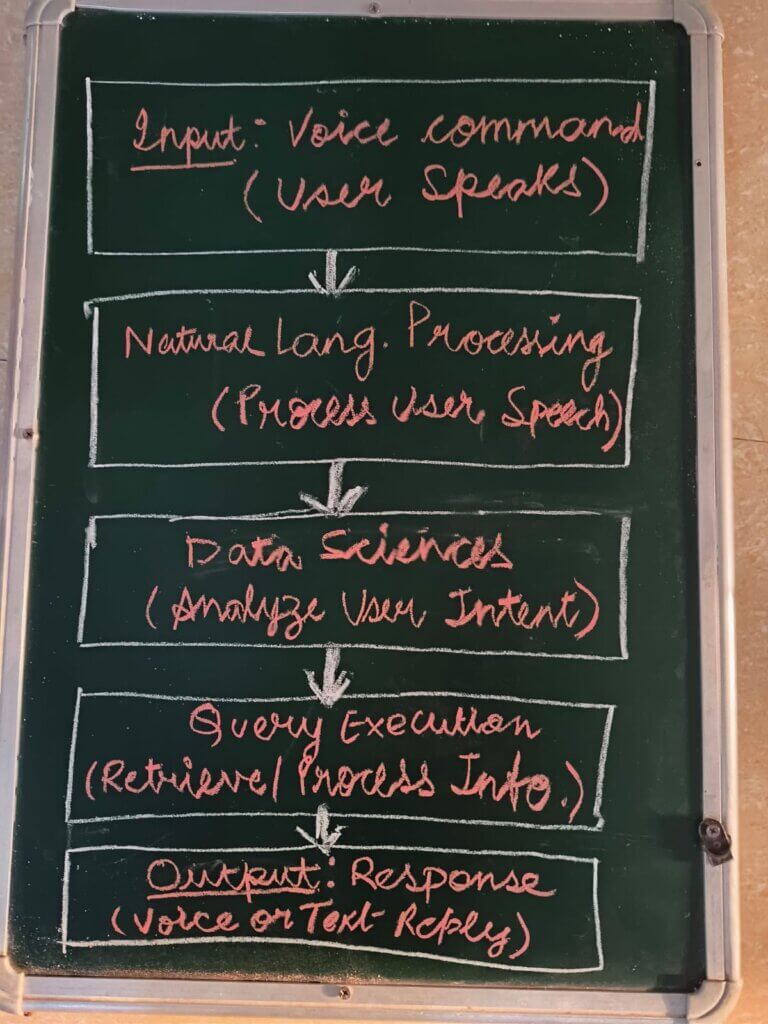

“Hey Siri, play the latest song by Taylor Swift” and boom, the song starts blaring out of the speakers. How does Siri do that? By using natural language processing to process what you said and provide a relevant response.

NLP is a field of AI, built largely on machine learning techniques, that enables machines to interact with humans via text and speech. Using NLP, machines can understand, interpret and finally generate human language.

How natural language processing works

Natural Language Processing is a complex process involving multiple stages.

Stage 1: Text preprocessing

At this stage, raw language data, i.e. what humans speak or write, is the input. This input is processed in multiple steps to clean and standardize the raw data for efficient processing.

- The first step here is tokenization, where text is broken into smaller units such as words or sentences. For instance, the sentence “I love AI” is tokenized into [“I”, “love”, “AI”]. This helps the system focus on individual components of the text.

- Next, stop word removal eliminates commonly used words like “is”, “the”, “and”, etc. that add little value to the analysis.

- Finally, stemming or lemmatization reduces words to their root form. For instance, “running” and “runs” are both reduced to “run”. Lemmatization, which relies on a vocabulary, also maps irregular forms such as “ran” to “run”.
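The three preprocessing steps above can be sketched in a few lines of Python. Everything here is a simplification: the stop word list is a tiny assumed sample, and the `stem` function is a toy rule I made up that only strips “ing” and “s”, so it will not handle irregular words like “ran”. Real projects use established NLP libraries instead.

```python
# A small assumed stop word list; real lists are much longer.
STOP_WORDS = {"is", "the", "and", "a", "an", "of"}


def tokenize(text):
    """Split text into word tokens, dropping surrounding punctuation."""
    tokens = (word.strip(".,!?\"'") for word in text.split())
    return [token for token in tokens if token]


def remove_stop_words(tokens):
    """Drop common words that add little value to the analysis."""
    return [token for token in tokens if token.lower() not in STOP_WORDS]


def stem(token):
    """Toy stemmer: strip 'ing' or 's', keeping at least three characters."""
    for suffix in ("ing", "s"):
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            token = token[: -len(suffix)]
            # "running" -> "runn" -> "run": undo a doubled final letter
            if token[-1] == token[-2]:
                token = token[:-1]
            return token
    return token


tokens = tokenize("The dog is running and the cat runs.")
filtered = remove_stop_words(tokens)
stems = [stem(token) for token in filtered]
print(tokens)
print(filtered)
print(stems)
```

Printing the result of each step shows how the sentence shrinks from eight tokens to four meaningful root words.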

Stage 2: Text representation

Following preprocessing, the next step is text representation, where the cleaned text is converted into a format the machine can analyze.

One common approach is the Bag of Words (BoW) model, which represents text as a collection of word occurrences, ignoring the order. For example, the sentence “AI is fun” is represented as {“AI”: 1, “is”: 1, “fun”: 1}.

More advanced models such as TF-IDF (Term Frequency-Inverse Document Frequency) weigh the importance of words based on how frequently they appear in a document compared to a collection of documents. Words that occur frequently in one document but are rare elsewhere are assigned higher weights, making the representation more insightful for tasks like text classification.
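Both representations are easy to try out by hand. The sketch below uses three tiny made-up documents and the most basic form of TF-IDF (term frequency multiplied by the logarithm of total documents over documents containing the word). Libraries typically use smoothed variants, so treat the exact numbers as illustrative.

```python
import math
from collections import Counter

# Three tiny hypothetical documents, already tokenized.
documents = [
    ["ai", "is", "fun"],
    ["ai", "is", "useful"],
    ["cricket", "is", "fun"],
]


def bag_of_words(tokens):
    """Count how often each word occurs, ignoring word order."""
    return dict(Counter(tokens))


def tf_idf(tokens, corpus):
    """Weight each word by term frequency times inverse document frequency."""
    counts = Counter(tokens)
    weights = {}
    for word, count in counts.items():
        tf = count / len(tokens)
        containing = sum(1 for doc in corpus if word in doc)
        idf = math.log(len(corpus) / containing)
        weights[word] = tf * idf
    return weights


print(bag_of_words(documents[0]))
print(tf_idf(documents[2], documents))
```

In the third document, “is” gets a weight of zero because it appears in every document, while “cricket” gets the highest weight because it appears nowhere else.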

Stage 3: Text understanding

Once the text is represented, the system moves to text understanding, where it extracts meaningful information.

Techniques such as Named Entity Recognition (NER) identify specific entities within the text, like names, dates or locations. For example, the sentence “Mahatma Gandhi was born in Gujarat” is analyzed as {“Person”: “Mahatma Gandhi”, “Location”: “Gujarat”}.

Another method, sentiment analysis, determines the emotional tone of the text — whether it is positive, negative or neutral. For instance, the phrase “The movie was fantastic!” would be classified as positive.
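As a very rough sketch of sentiment analysis, the snippet below simply counts positive and negative cue words. The word lists and the `sentiment` function are assumptions for illustration; real sentiment models learn such cues from large labelled datasets and handle things like negation and sarcasm far better.

```python
# Tiny assumed word lists; real sentiment models learn these from labelled data.
POSITIVE = {"fantastic", "great", "good", "love"}
NEGATIVE = {"terrible", "boring", "bad", "hate"}


def sentiment(text):
    """Classify text as positive, negative or neutral by counting cue words."""
    words = [word.strip(".,!?").lower() for word in text.split()]
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("The movie was fantastic!"))
```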

Stage 4: Generating responses/output

Finally, the processed information is passed to machine learning models such as artificial neural networks, k-nearest neighbour (KNN), deep learning models, etc. that predict outcomes or generate responses based on large training datasets. For example, a chatbot might be trained to recognize and answer common customer service queries, such as “What are your working hours?” or “Can I return this item?”

Once the system has made its predictions, it generates an output, translating its machine-readable results into a human-friendly response. For instance, a smart assistant might convert the internal analysis of a weather query into a spoken answer, such as “Today’s weather is sunny with a high of 30°C.”
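Here is a minimal sketch of how a customer service chatbot might pick a reply, using the simplest form of k-nearest neighbour (k = 1) over bag-of-words vectors. The training queries, intent labels and canned replies are all hypothetical, and counting shared words is just one simple way to measure similarity.

```python
from collections import Counter

# Hypothetical training examples: (customer query, intent label).
TRAINING = [
    ("what are your working hours", "hours"),
    ("when do you open", "hours"),
    ("can i return this item", "returns"),
    ("how do i send a product back", "returns"),
]
# Hypothetical canned replies for each intent.
REPLIES = {
    "hours": "We are open from 9 am to 6 pm, Monday to Saturday.",
    "returns": "Yes, items can be returned within 30 days.",
}


def vectorize(text):
    """Bag-of-words vector: word -> count."""
    return Counter(text.lower().replace("?", "").split())


def similarity(a, b):
    """Number of word occurrences the two vectors share."""
    return sum((a & b).values())


def predict_intent(query):
    """1-nearest-neighbour: copy the label of the most similar training query."""
    query_vector = vectorize(query)
    _, label = max(
        TRAINING,
        key=lambda example: similarity(query_vector, vectorize(example[0])),
    )
    return label


def respond(query):
    """Turn the predicted intent into a human-friendly reply."""
    return REPLIES[predict_intent(query)]


print(respond("What are your working hours?"))
print(respond("Can I return this item?"))
```

The prediction step and the response step are kept separate on purpose: the model only decides what the user wants, and a second step turns that decision into readable text.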

Examples of applications that use NLP: Chatbots, Virtual Assistants, Large Language Models (LLMs), Language Translators

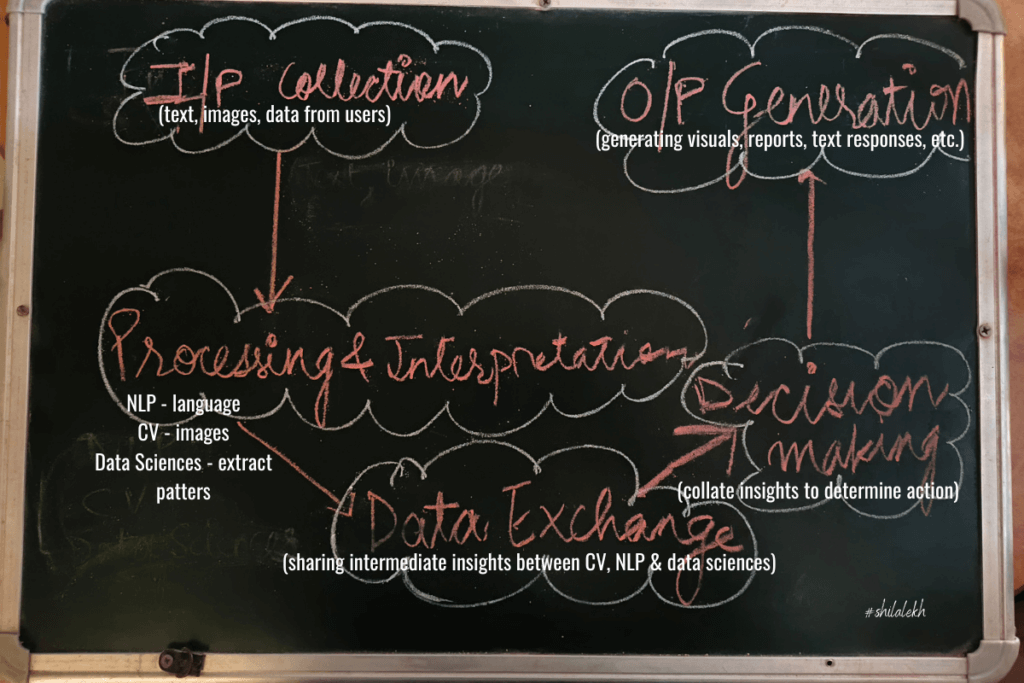

How these domains work together: Integration of data, CV and NLP

The three AI domains — Data Sciences, Computer Vision, and Natural Language Processing — work together to power complex systems and applications.

Key steps in integration

- Input Collection: The system collects input data from users or the environment. Each domain collects data that it is equipped to handle.

  - Text or voice input (by NLP).

  - Images or videos (by Computer Vision).

  - Raw datasets, such as sales data or weather records (by Data Sciences).

- Processing and Interpretation: Once the input is collected, each domain processes its respective input. These processes occur in parallel or sequentially, depending on the application.

  - Data Sciences extracts insights from datasets, performs calculations, or predicts trends.

  - Computer Vision analyzes visual data to identify objects, patterns or anomalies.

  - NLP breaks down natural language input into structured data and analyzes it to determine user intent.

- Data exchange between domains: The outputs from one domain often serve as inputs for another:

  - NLP might pass the extracted user query to Data Sciences for further analysis.

  - Computer Vision might provide labeled visual data that NLP uses to generate a descriptive text output.

  - Data Sciences might supply structured information that Computer Vision visualizes (e.g., heatmaps for a geographic region).

- Decision making: Results from different domains are aggregated to form a coherent response or action. For example,

  - Combining NLP’s intent analysis and data models’ weather prediction to answer the query “Will it rain tomorrow?”

  - Using computer vision to detect a pedestrian and feeding this info to predict movement using data models in autonomous driving.

- Output generation: The integrated system delivers a final output, tailored to user needs:

  - Spoken or written response (via NLP).

  - A visual map or chart (via Computer Vision).

  - A detailed analytical report (via Data Sciences).
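The “Will it rain tomorrow?” example can be sketched as a tiny pipeline in which the output of the NLP step becomes the input of the data step. The forecast table stands in for a trained weather model, and all names and numbers here are assumptions for illustration.

```python
# Hypothetical forecast table standing in for a trained weather model.
FORECAST = {
    "today": {"rain_probability": 0.1},
    "tomorrow": {"rain_probability": 0.8},
}


def parse_query(text):
    """NLP step (toy): extract the topic and the day the user is asking about."""
    words = text.lower().strip("?").split()
    topic = "rain" if "rain" in words else None
    day = next((w for w in words if w in FORECAST), None)
    return {"topic": topic, "day": day}


def predict_rain(day):
    """Data step (toy): look up the rain probability for the given day."""
    return FORECAST[day]["rain_probability"]


def answer(text):
    """Integration: NLP output feeds the data lookup; the result becomes a reply."""
    intent = parse_query(text)
    if intent["topic"] != "rain" or intent["day"] is None:
        return "Sorry, I can only answer rain questions for today or tomorrow."
    probability = predict_rain(intent["day"])
    verdict = "likely" if probability >= 0.5 else "unlikely"
    return f"Rain is {verdict} {intent['day']} ({probability:.0%} chance)."


print(answer("Will it rain tomorrow?"))
```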

Why integration is important

- Bridging gaps between data types: Natural language, images and datasets are fundamentally different types of information. Integration allows these diverse inputs to work together for a single purpose. For example, a weather assistant uses language input, database analysis and graphical maps to give a complete response.

- Building context-aware systems: Integration ensures that systems understand the context better by combining insights from multiple perspectives. For example, a smart assistant not only answers “What’s the weather?” but also interprets “Should I take an umbrella?” by considering weather predictions and user location.

- Scalability and efficiency: Integration optimizes resource usage. As opposed to isolated systems, a unified model performs multiple tasks efficiently. For example, autonomous vehicles rely on CV for navigation, Data Sciences for traffic predictions and NLP for passenger commands, all running simultaneously.

Advantages of integration

Integration of these three domains — data, CV and NLP — provides these advantages:

- Enhanced Accuracy: Combining insights from multiple domains leads to better predictions and responses.

- Scalability: Unified AI systems can adapt to handle complex, multi-step processes that the individual domains could not handle on their own.

- User-Centric Experience: Users receive tailored and context-aware solutions, improving satisfaction.

Detailed example: autonomous vehicles

As you know, autonomous vehicles are a work in progress. AI systems are working on enhancing road safety through more accurate real-time decision-making. They can also facilitate reduced commute times with dynamic route adjustments, one step ahead of what Google Maps does.

Let us see how these three domains come together in autonomous vehicles.

- Input:

  - A camera captures real-time video of the road (Computer Vision).

  - Sensors and databases provide traffic, weather, and GPS data (Data Sciences).

  - Passengers issue verbal instructions like “Take me home” (NLP).

- Processing:

  - CV identifies objects such as cars, pedestrians, and stop signs.

  - Data Sciences predicts traffic congestion and calculates optimal routes.

  - NLP interprets the passenger’s command and translates it into specific actions.

- Integration:

  - The navigation system combines the optimal route (from data sciences) with real-time obstacle detection (from CV).

  - It ensures the car follows the safest path, adhering to traffic rules, while considering the user’s destination.

- Output:

  - The car starts driving toward the intended destination, providing updates like “expected time of arrival is 15 minutes” (via NLP).
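The flow above can be sketched as three stub functions, one per domain, plus a `decide` function that combines them. These stubs are placeholders I made up: a real vehicle would run trained models for each step, and the route names and travel times are hypothetical.

```python
def detect_objects(frame):
    """CV step (stub): a real system would run a trained detector on the frame."""
    return frame["objects"]


def plan_route(destination, traffic):
    """Data step (stub): pick the route with the lowest predicted travel time."""
    routes = traffic[destination]
    return min(routes, key=routes.get)


def parse_command(text):
    """NLP step (stub): map a spoken command to a destination key."""
    return "home" if "home" in text.lower() else None


def decide(frame, command, traffic):
    """Integration: combine the three outputs into one driving decision."""
    destination = parse_command(command)
    if destination is None:
        return {"action": "wait", "route": None}
    route = plan_route(destination, traffic)
    obstacles = {"pedestrian", "stop sign"} & set(detect_objects(frame))
    action = "brake" if obstacles else "drive"
    return {"action": action, "route": route}


# Hypothetical inputs for one moment in time.
traffic = {"home": {"highway": 25, "city road": 15}}  # minutes per route
frame = {"objects": ["car", "stop sign"]}
print(decide(frame, "Take me home", traffic))
```

Here the car picks the faster city road but brakes for now, because the camera frame contains a stop sign.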

More real world examples

Here are some more examples of how AI models integrate data, CV and NLP.

AI in healthcare

AI is revolutionizing healthcare by making diagnoses faster and more accurate, improving patient outcomes. Let us take the example of disease diagnosis:

- NLP processes patient descriptions of symptoms. It would analyze the description to identify conditions like “chronic cough” or “shortness of breath.”

- Data Sciences analyzes historical patient data and current research to suggest likely conditions. Say it predicts diseases like diabetes using patient lifestyle and medical history data.

- Computer Vision analyzes X-rays, CT scans or MRI images for abnormalities. Some studies have reported that AI systems can detect tumors or fractures with accuracy comparable to, and in some tasks higher than, manual inspection.

The overall impact of this seamless integration is early and accurate detection of diseases. Also, customized treatment plans can be created quickly using patient-specific data.

AI in education

AI can transform education by creating personalized learning experiences. Remember, current AI systems cannot replace human tutors but support them in numerous ways to make both teaching and learning a joy.

- NLP can understand student queries and provide relevant answers. So after a concept has been taught by teachers, AI tutors can help students revise and repeat it. They can even give students tests and quizzes to gauge how much they have understood and create a customized tutoring plan.

- Data sciences can track student progress via test and quiz scores, and suggest improvements by identifying topics where a student struggles.

- Computer vision can recognize facial expressions to assess engagement levels. If the system detects boredom or confusion during online lessons, it can change its tactics accordingly.

Using intelligent AI tutors can help teachers create tailored lessons based on individual needs. From my experience with teaching, I can confidently predict that students would engage better in this environment because no one (neither the teacher nor their peers) would be judging them while they receive real-time feedback.

AI in e-commerce

Remember the product suggestions provided by shopping apps such as Amazon, Myntra and Big Basket the moment you search for a product? That’s AI at work. AI enhances the online shopping experience by predicting user preferences and improving operational efficiency.

Let us continue with the example of product recommendation systems.

- NLP understands user reviews and feedback to assess product sentiment. It then highlights highly rated products to customers based on reviews and testimonials.

- Data sciences analyzes purchase history to recommend products, like suggesting running shoes after a customer buys sportswear.

- Computer vision can be used to support image-based searches, allowing users to upload a photo and find visually similar products. Also, CV can help offer virtual trial rooms to customers.
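The purchase-history idea can be illustrated with a very simple “bought together” counter. The baskets below are invented, and production recommendation systems use far more sophisticated models; this sketch only shows the basic intuition.

```python
from collections import Counter

# Hypothetical purchase histories, one basket per customer.
baskets = [
    {"sportswear", "running shoes"},
    {"sportswear", "running shoes", "water bottle"},
    {"sportswear", "yoga mat"},
    {"laptop", "mouse"},
]


def recommend(product, histories, top_n=2):
    """Suggest the items most often bought together with the given product."""
    together = Counter()
    for basket in histories:
        if product in basket:
            together.update(basket - {product})
    return [item for item, _ in together.most_common(top_n)]


print(recommend("sportswear", baskets, top_n=1))
```

Because running shoes appear alongside sportswear more often than any other item, they come out as the top suggestion.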

The two most critical impacts of product recommendation systems are increased sales through personalized suggestions and improved customer satisfaction due to better product discovery.

Conclusion

Artificial Intelligence is more than just the shiny new toy in the market. It is the latest technology that is reshaping our world, much like major inventions did in their own age: the way steam engines revolutionized the economy as well as the way people travelled, the way computers revolutionized how people worked, performed research and conducted business, or how UPI revolutionized the world of finance. As students ready to take on the real world in a few years’ time, you must broadly understand how AI works, so that you can understand why it has the potential to impact our lives in varied ways.

Recap of Key Points

- Data Sciences helps us analyze and interpret large datasets to uncover meaningful insights.

- Computer Vision enables machines to understand visual data and make decisions based on it.

- Natural Language Processing bridges the gap between human communication and computer systems.

- Together, these domains power intelligent systems that can respond to diverse inputs and solve complex problems, such as in healthcare, education, e-commerce, and autonomous vehicles.

Explore AI on your own

As students, you have the unique opportunity to not only understand AI but also to contribute to its development. Whether it’s participating in hackathons, joining AI communities or pursuing interdisciplinary learning, the first step is curiosity.

Start asking questions, experimenting with tools and imagining solutions to problems around you.

You can start by asking your doubts, or asking for AI tool recommendations, in the comments here.